Tianyu Xu

Enhancing XR Auditory Realism via Multimodal Scene-Aware Acoustic Rendering

Nov 14, 2025

Abstract: In Extended Reality (XR), rendering sound that accurately simulates real-world acoustics is pivotal in creating lifelike and believable virtual experiences. However, existing XR spatial audio rendering methods often struggle with real-time adaptation to diverse physical scenes, causing a sensory mismatch between visual and auditory cues that disrupts user immersion. To address this, we introduce SAMOSA, a novel on-device system that renders spatially accurate sound by dynamically adapting to its physical environment. SAMOSA leverages a synergistic multimodal scene representation by fusing real-time estimations of room geometry, surface materials, and semantic-driven acoustic context. This rich representation then enables efficient acoustic calibration via scene priors, allowing the system to synthesize a highly realistic Room Impulse Response (RIR). We validate our system through technical evaluation using acoustic metrics for RIR synthesis across various room configurations and sound types, alongside an expert evaluation (N=12). Evaluation results demonstrate SAMOSA's feasibility and efficacy in enhancing XR auditory realism.

Steerable Chatbots: Personalizing LLMs with Preference-Based Activation Steering

May 07, 2025

Abstract: As large language models (LLMs) improve in their capacity to serve as personal AI assistants, their ability to output uniquely tailored, personalized responses that align with the soft preferences of their users is essential for enhancing user satisfaction and retention. However, untrained lay users have poor prompt specification abilities and often struggle with conveying their latent preferences to AI assistants. To address this, we leverage activation steering to guide LLMs to align with interpretable preference dimensions during inference. In contrast to memory-based personalization methods that require longer user history, steering is extremely lightweight and can be easily controlled by the user via a linear strength factor. We embed steering into three different interactive chatbot interfaces and conduct a within-subjects user study (n=14) to investigate how end users prefer to personalize their conversations. The results demonstrate the effectiveness of preference-based steering for aligning real-world conversations with hidden user preferences, and highlight further insights on how diverse values around control, usability, and transparency lead users to prefer different interfaces.
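
The linear strength factor mentioned in the abstract above can be illustrated with a minimal sketch. It assumes the common formulation of activation steering, in which a fixed direction vector is scaled and added to a hidden state; the abstract does not spell out the paper's exact method, and the function name `steer` and all values here are hypothetical.

```python
def steer(hidden, direction, strength):
    """Shift one hidden-state vector along a preference direction.

    Assumed formulation: hidden + strength * direction. The names and
    values are illustrative and not taken from the paper.
    """
    if len(hidden) != len(direction):
        raise ValueError("hidden and direction must have the same length")
    return [h + strength * d for h, d in zip(hidden, direction)]


hidden = [0.5, -1.0, 2.0]      # hypothetical activation at one layer
direction = [1.0, 0.0, -1.0]   # hypothetical preference direction

# A strength of 0.0 leaves the activation unchanged; larger values
# move it further along the preference direction.
for strength in (0.0, 0.5, 1.0):
    print(strength, steer(hidden, direction, strength))
```

Under this assumption, a single scalar is the only quantity a user-facing control needs to expose, which fits the abstract's description of steering as lightweight.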

AcL: Action Learner for Fault-Tolerant Quadruped Locomotion Control

Mar 27, 2025

Abstract: Quadrupedal robots can learn versatile locomotion skills but remain vulnerable when one or more joints lose power. In contrast, dogs and cats can adopt limping gaits when injured, demonstrating their remarkable ability to adapt to physical conditions. Inspired by such adaptability, this paper presents Action Learner (AcL), a novel teacher-student reinforcement learning framework that enables quadrupeds to autonomously adapt their gait for stable walking under multiple joint faults. Unlike conventional teacher-student approaches that enforce strict imitation, AcL leverages teacher policies to generate style rewards, guiding the student policy without requiring precise replication. We train multiple teacher policies, each corresponding to a different fault condition, and subsequently distill them into a single student policy with an encoder-decoder architecture. While prior works primarily address single-joint faults, AcL enables quadrupeds to walk with up to four faulty joints across one or two legs, autonomously switching between different limping gaits when faults occur. We validate AcL on a real Go2 quadruped robot under single- and double-joint faults, demonstrating fault-tolerant, stable walking, smooth gait transitions between normal and limping gaits, and robustness against external disturbances.

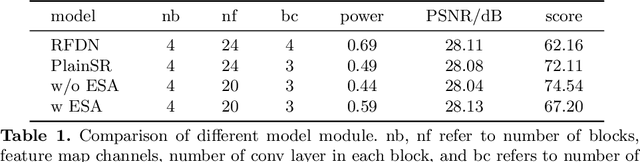

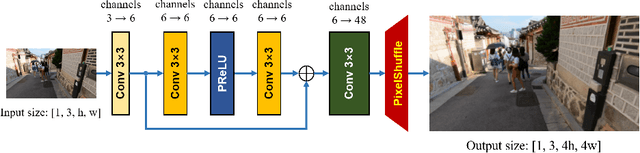

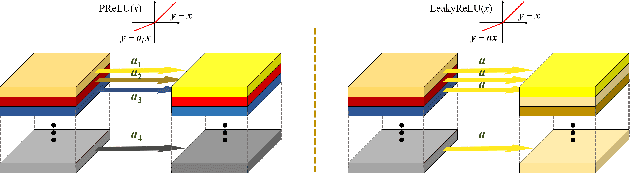

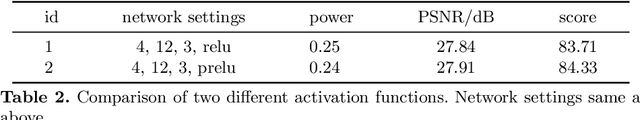

Power Efficient Video Super-Resolution on Mobile NPUs with Deep Learning, Mobile AI & AIM 2022 challenge: Report

Nov 07, 2022

Abstract: Video super-resolution is one of the most popular tasks on mobile devices, widely used for automatic improvement of low-bitrate and low-resolution video streams. While numerous solutions have been proposed for this problem, they are usually quite computationally demanding, demonstrating low FPS rates and low power efficiency on mobile devices. In this Mobile AI challenge, we address this problem and ask the participants to design an end-to-end real-time video super-resolution solution for mobile NPUs optimized for low energy consumption. The participants were provided with the REDS training dataset containing video sequences for a 4X video upscaling task. The runtime and power efficiency of all models were evaluated on the powerful MediaTek Dimensity 9000 platform with a dedicated AI processing unit capable of accelerating floating-point and quantized neural networks. All proposed solutions are fully compatible with the above NPU, demonstrating frame rates of up to 500 FPS and power consumption of 0.2 [Watt / 30 FPS]. A detailed description of all models developed in the challenge is provided in this paper.

ELSR: Extreme Low-Power Super Resolution Network For Mobile Devices

Aug 31, 2022

Abstract: With the popularity of mobile devices, e.g., smartphones and wearable devices, lighter and faster models are crucial for the application of video super-resolution. However, most previous lightweight models tend to concentrate on reducing the latency of model inference on desktop GPUs, which may not be energy efficient on current mobile devices. In this paper, we propose the Extreme Low-Power Super Resolution (ELSR) network, which consumes only a small amount of energy on mobile devices. Pretraining and finetuning methods are applied to boost the performance of the extremely tiny model. Extensive experiments show that our method achieves an excellent balance between restoration quality and power consumption. Finally, we achieve a competitive score of 90.9 with PSNR 27.34 dB and power 0.09 W/30FPS on the target MediaTek Dimensity 9000 platform, ranking 1st place in the Mobile AI & AIM 2022 Real-Time Video Super-Resolution Challenge.

AIM 2022 Challenge on Super-Resolution of Compressed Image and Video: Dataset, Methods and Results

Aug 25, 2022

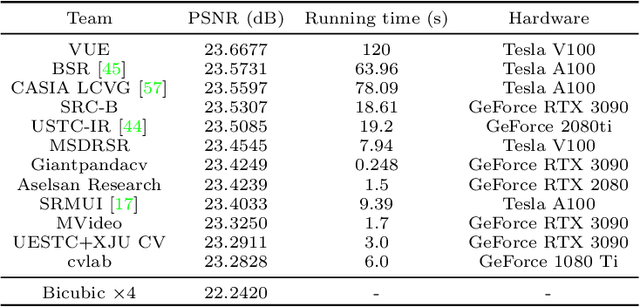

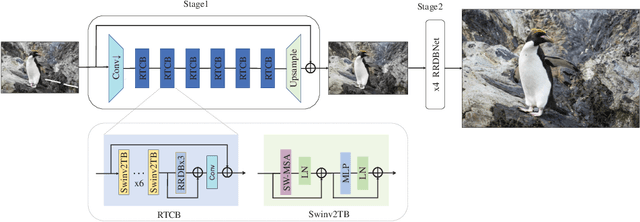

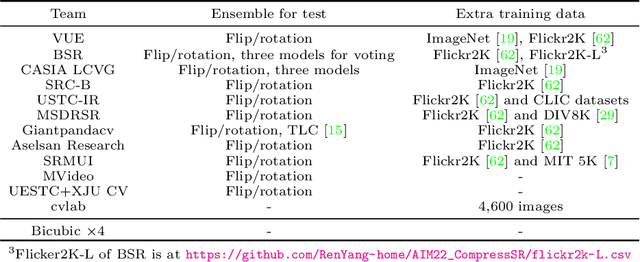

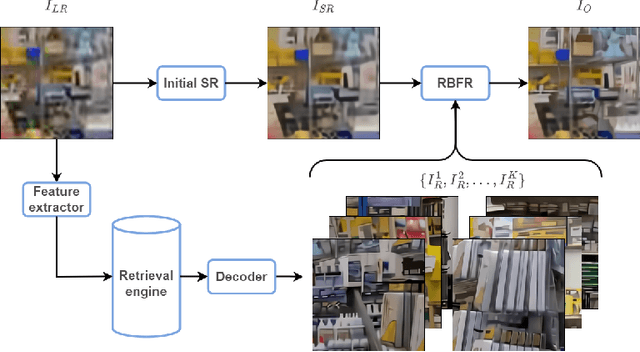

Abstract: This paper reviews the Challenge on Super-Resolution of Compressed Image and Video at AIM 2022. This challenge includes two tracks. Track 1 aims at the super-resolution of compressed images, and Track 2 targets the super-resolution of compressed video. In Track 1, we use the popular dataset DIV2K as the training, validation and test sets. In Track 2, we propose the LDV 3.0 dataset, which contains 365 videos, including the LDV 2.0 dataset (335 videos) and 30 additional videos. In this challenge, 12 teams and 2 teams submitted final results to Track 1 and Track 2, respectively. The proposed methods and solutions gauge the state-of-the-art of super-resolution on compressed image and video. The proposed LDV 3.0 dataset is available at https://github.com/RenYang-home/LDV_dataset. The homepage of this challenge is at https://github.com/RenYang-home/AIM22_CompressSR.
